Leaky Rectified Linear Unit (Leaky ReLU) is a variant of the ReLU activation function that addresses the "dying ReLU" problem. This problem occurs when a ReLU neuron's input becomes negative for essentially every training example. The neuron then always outputs zero, its gradient is also zero, and it stops learning.

Leaky ReLU introduces a small, non-zero slope for negative inputs, allowing the neurons to remain active even when the input is negative. The mathematical definition of Leaky ReLU is as follows:

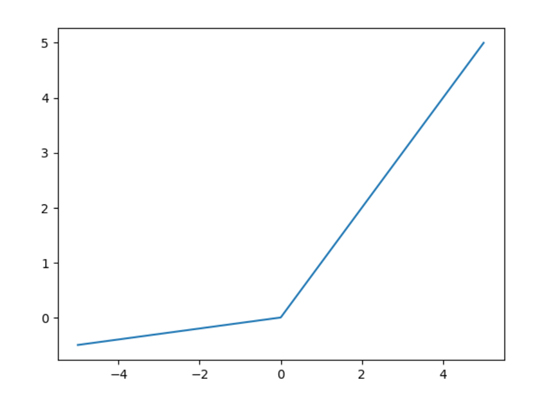

f(x) = max(a*x, x)

Where:

x is the input to the function. It can be a single value or a vector, in which case the function is applied element-wise. In a neural network it is usually the weighted sum of a neuron's inputs.

max returns the larger of a*x and x.

a is a small positive constant less than 1, typically 0.01. The max form above only matches Leaky ReLU when 0 < a < 1.
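Because 0 < a < 1, the max form is equivalent to a piecewise definition: for x >= 0 we have a*x <= x, and for x < 0 we have a*x - x = (a - 1)x > 0, so a*x is the larger value. Written out in LaTeX as a restatement of the same formula:

```latex
f(x) = \max(a x,\, x) =
\begin{cases}
x,   & x \ge 0 \\
a x, & x < 0
\end{cases}
\qquad 0 < a < 1
```

If a were greater than 1, max(a*x, x) would select a*x for positive inputs, which is why the max form requires a < 1.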

When x is positive, Leaky ReLU behaves like the standard ReLU (f(x) = x). When x is negative, the output is a*x, a small negative value, and the slope of the function is a rather than zero. Because the gradient never vanishes completely, the neuron keeps receiving weight updates and can continue to learn even when its input is negative.
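A minimal scalar sketch of the two branches and their slopes, assuming a = 0.01. The function names are illustrative. The derivative is undefined at exactly x = 0, and this sketch simply uses a there.

```python
def leaky_relu(x: float, a: float = 0.01) -> float:
    """Scalar Leaky ReLU: x for positive inputs, a * x otherwise."""
    return x if x > 0 else a * x


def leaky_relu_slope(x: float, a: float = 0.01) -> float:
    """Slope (derivative) of Leaky ReLU; uses a at x == 0, where it is undefined."""
    return 1.0 if x > 0 else a


# Compare a positive and a negative input
for x in (3.0, -3.0):
    print(f"x = {x:5.1f}  f(x) = {leaky_relu(x):6.3f}  slope = {leaky_relu_slope(x)}")
```

For x = -3.0 the output is -0.03 and the slope is 0.01, whereas the standard ReLU would give 0 for both.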

The benefits of Leaky ReLU include:

- Avoiding the "dying ReLU" problem: The small, non-zero slope for negative inputs means a neuron still receives gradient updates when its input is negative, so it can recover instead of becoming permanently inactive.

- Simplicity and computational efficiency: Like the standard ReLU, Leaky ReLU needs only a comparison and a multiplication, so it is cheap to compute and easy to implement.
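To illustrate why the non-zero slope matters, the sketch below (assuming NumPy) compares the gradients of a single neuron whose pre-activation is negative for every input in a small batch. The data, weights, bias, and the upstream gradient of 1 per sample are made-up values for illustration. By the chain rule, the weight gradient is X^T (dL/df * f'(z)) and the bias gradient is the sum of dL/df * f'(z).

```python
import numpy as np


def relu_grad(z):
    # Derivative of ReLU: 1 where z > 0, else 0
    return (z > 0).astype(float)


def leaky_relu_grad(z, alpha=0.01):
    # Derivative of Leaky ReLU: 1 where z > 0, else alpha
    return np.where(z > 0, 1.0, alpha)


# Hypothetical neuron whose bias has drifted so far negative that its
# pre-activation z = X @ w + b is below zero for every input in the batch
X = np.array([[0.5, 1.0], [1.5, -0.2], [0.3, 0.8]])
w = np.array([0.4, -0.1])
b = -3.0
z = X @ w + b

# Assume an upstream gradient dL/df of 1 for each sample (illustrative only)
upstream = np.ones_like(z)

# Chain rule: dL/dw = X^T (dL/df * f'(z)), dL/db = sum(dL/df * f'(z))
grad_w_relu = X.T @ (upstream * relu_grad(z))
grad_w_leaky = X.T @ (upstream * leaky_relu_grad(z))
grad_b_relu = np.sum(upstream * relu_grad(z))
grad_b_leaky = np.sum(upstream * leaky_relu_grad(z))

print("pre-activations:", z)
print("ReLU gradients (w, b):      ", grad_w_relu, grad_b_relu)
print("Leaky ReLU gradients (w, b):", grad_w_leaky, grad_b_leaky)
```

With ReLU every gradient entry is zero, so gradient descent leaves w and b unchanged and the neuron stays inactive. With Leaky ReLU the gradients are small but non-zero, so the parameters can still move.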

In practice, Leaky ReLU is commonly used as an alternative to the standard ReLU, especially in very deep neural networks or other models where the "dying ReLU" problem is likely to occur. However, the best activation function depends on the specific problem and architecture, and experimentation is often necessary to find the one that works best for a particular task. A related variant, Parametric ReLU (PReLU), goes one step further: instead of fixing the slope a for negative inputs, it learns a during training, which gives the model more flexibility.
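The difference between Leaky ReLU and PReLU can be sketched with a toy example, assuming NumPy. The formula is the same, but a is updated by gradient descent using df/da = x for negative inputs (and 0 otherwise). The target slope of 0.25, the learning rate, the starting value, and the mean-squared-error loss are arbitrary choices for illustration. In a real network, a is learned by backpropagation together with the weights.

```python
import numpy as np


def prelu(x, a):
    # Same formula as Leaky ReLU, but `a` is a trainable parameter
    return np.where(x > 0, x, a * x)


def prelu_grad_a(x):
    # df/da = x for non-positive inputs, 0 otherwise
    return np.where(x > 0, 0.0, x)


# Toy problem (hypothetical): recover a target slope of 0.25 from data
rng = np.random.default_rng(0)
x = rng.normal(size=1000)
y = prelu(x, 0.25)

a, lr = 0.01, 0.5  # start from the usual Leaky ReLU value
for _ in range(200):
    err = prelu(x, a) - y                       # residuals
    grad = np.mean(2.0 * err * prelu_grad_a(x))  # d(MSE)/da
    a -= lr * grad                              # gradient descent step on a

print(f"learned a = {a:.4f}")
```

Only the negative inputs contribute to the gradient of a, which mirrors the fact that a only affects the negative branch of the function.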

Python Implementation

Implementing the Leaky ReLU function in Python takes only a few lines of code.
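A minimal sketch, assuming NumPy, that mirrors the formula f(x) = max(a*x, x) and works element-wise on scalars or arrays. The name leaky_relu and the default alpha = 0.01 are illustrative choices, not necessarily the code from the author's notebook.

```python
import numpy as np


def leaky_relu(x, alpha=0.01):
    """Element-wise Leaky ReLU, f(x) = max(alpha * x, x).

    The max form is only correct for 0 < alpha < 1.
    """
    if not 0 < alpha < 1:
        raise ValueError("alpha must be in (0, 1) for the max form")
    x = np.asarray(x, dtype=float)
    return np.maximum(alpha * x, x)


# Usage example: negative inputs are scaled by alpha, non-negative inputs pass through
inputs = np.array([-10.0, -1.0, 0.0, 2.5])
outputs = leaky_relu(inputs)
print(outputs)
```

An equivalent alternative is np.where(x > 0, x, alpha * x), which follows the piecewise definition directly and does not depend on alpha being less than 1.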

The output of the author's code is the image shown near the top of the article. You can run the code in the following Colab notebook: Leaky ReLU activation function
