Data cleaning and preprocessing are crucial steps in the data analysis process. They involve identifying and addressing issues and inconsistencies in the raw data to ensure its quality, accuracy, and suitability for further analysis. Proper data cleaning and preprocessing enhance the reliability and effectiveness of data analysis, machine learning models, and other data-driven tasks.

The data cleaning and preprocessing process typically includes the following steps:

- Handling Missing Data: Identify and handle missing values in the dataset. Common approaches are removing the rows or columns that contain missing values, filling the gaps with simple imputation (for example, the column mean, median, or mode), or using more advanced imputation methods such as k-nearest neighbors or regression imputation.

- Handling Outliers: Outliers are data points that deviate significantly from the rest of the data, and they can skew statistical analyses and machine learning models. Identify them (for example, with z-scores or the interquartile range rule) and handle them appropriately: remove them, transform them (for example, by capping them at a threshold), or treat them as missing values.

- Data Standardization/Normalization: If features are on different scales, it is usually beneficial to bring them to a common scale, particularly for distance-based and gradient-based models. Standardization rescales the data to a mean of 0 and a standard deviation of 1, while normalization (min-max scaling) rescales it to a fixed range, such as [0, 1].

- Encoding Categorical Data: Machine learning models typically require numeric inputs. Therefore, categorical variables need to be encoded into numerical representations using techniques like one-hot encoding, label encoding, or binary encoding.

- Removing Redundant Features: Identify and remove features that contribute little to the analysis or that introduce multicollinearity (for example, pairs of highly correlated features). Reducing the number of features can improve a model's efficiency and interpretability.

- Feature Engineering: Create new features or transformations of existing features to provide more meaningful and informative representations of the data.

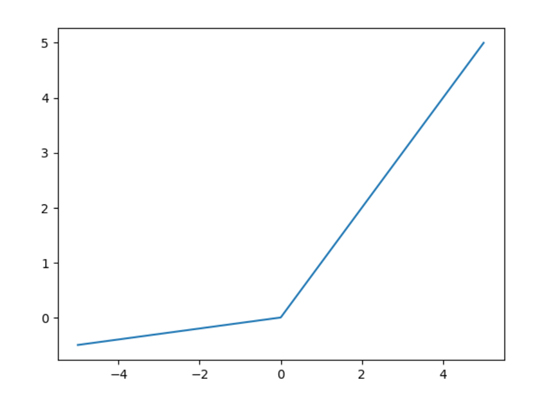

- Handling Skewed Data: Address skewed distributions using techniques such as the log transformation or the Box-Cox transformation, which can bring the data closer to a normal distribution and improve model performance.

- Data Integration: Combine multiple datasets or data sources, if needed, to create a more comprehensive dataset for analysis.

- Data Type Conversion: Ensure that each column has the correct data type for analysis and modeling, for example parsing dates as dates and converting numeric strings to numbers.

- Data Partitioning: Split the dataset into training, validation, and test sets for model training and evaluation.

- Data Visualization: Visualize the data at different stages of cleaning and preprocessing to understand the effects of the transformations and to detect any further issues.
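To make the missing-data step concrete, here is a minimal sketch of the two simplest options, row deletion and mean/median imputation. It uses plain Python with a list of dicts so that it runs without extra dependencies; the toy records and the helper names `drop_missing` and `impute` are hypothetical, chosen only for illustration.

```python
from statistics import mean, median

# Hypothetical toy records; None marks a missing value.
rows = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 61000},
    {"age": 29, "income": None},
    {"age": 41, "income": 58000},
]


def drop_missing(records, columns):
    """Listwise deletion: keep only rows with no missing value in `columns`."""
    return [r for r in records if all(r[c] is not None for c in columns)]


def impute(records, column, strategy="mean"):
    """Fill missing values in `column` with the mean or median of the observed values."""
    observed = [r[column] for r in records if r[column] is not None]
    if strategy == "mean":
        fill = mean(observed)
    elif strategy == "median":
        fill = median(observed)
    else:
        raise ValueError("strategy must be 'mean' or 'median'")
    # Build new dicts so the original records are left untouched.
    return [{**r, column: fill if r[column] is None else r[column]} for r in records]


print(len(drop_missing(rows, ["age", "income"])))  # 2
print(impute(rows, "age", strategy="median")[1]["age"])  # 34
```

Deletion is the simplest option but discards information, while mean or median imputation keeps every row at the cost of shrinking the variance of the imputed column. In pandas, `DataFrame.dropna` and `DataFrame.fillna` cover the same two operations.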
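For the outlier step, one widely used detection rule is Tukey's interquartile range (IQR) rule: values below Q1 - 1.5 * IQR or above Q3 + 1.5 * IQR are flagged. The sketch below applies it with the standard library (`statistics.quantiles` requires Python 3.8 or later) and then shows capping as one possible treatment. The function names and the default multiplier `k=1.5` are illustrative choices, and other quantile methods would shift the fences slightly.

```python
from statistics import quantiles


def iqr_bounds(values, k=1.5):
    """Return (low, high) fences using Tukey's rule: Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = quantiles(values, n=4)  # default method is "exclusive"
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def cap_outliers(values, k=1.5):
    """Clip values that fall outside the fences back to the nearest fence."""
    low, high = iqr_bounds(values, k)
    return [min(max(v, low), high) for v in values]


data = [10, 12, 11, 13, 12, 11, 95]  # 95 is an obvious outlier
low, high = iqr_bounds(data)
outliers = [v for v in data if v < low or v > high]
print(outliers)  # [95]
print(cap_outliers(data))
```

Capping keeps the row while limiting its influence; whether to cap, drop, or keep an outlier depends on whether it is a data error or a genuine extreme value.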
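The two scaling methods described under standardization/normalization can be written directly from their definitions: z = (x - mean) / std for standardization and (x - min) / (max - min) for min-max normalization. This is a minimal plain-Python sketch; the helper names are hypothetical, and it uses the population standard deviation (`statistics.pstdev`), which is one common convention.

```python
from statistics import mean, pstdev


def standardize(values):
    """z-score: subtract the mean and divide by the population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0] * len(values)  # constant column: nothing to scale
    return [(v - mu) / sigma for v in values]


def min_max(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values into [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [new_min] * len(values)  # constant column: map everything to new_min
    return [new_min + (v - lo) * (new_max - new_min) / (hi - lo) for v in values]


heights_cm = [150, 160, 170, 180, 190]
print(min_max(heights_cm))  # [0.0, 0.25, 0.5, 0.75, 1.0]
z = standardize(heights_cm)
```

In a modeling workflow, the mean, standard deviation, minimum, and maximum should be computed on the training split only and then reused for the validation and test splits, so that no information leaks from the evaluation data.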
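Two of the encodings named under categorical data, label encoding and one-hot encoding, are sketched below in plain Python. Categories are sorted so that the mapping is reproducible; the function names are hypothetical, and binary encoding is not shown.

```python
def label_encode(values):
    """Map each distinct category to an integer code (sorted for reproducibility)."""
    mapping = {cat: i for i, cat in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping


def one_hot(values):
    """Create one binary column per category; each row has exactly one 1."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values], categories


colors = ["red", "green", "blue", "green"]
codes, mapping = label_encode(colors)
print(codes)  # [2, 1, 0, 1]
vectors, cats = one_hot(colors)
print(cats)  # ['blue', 'green', 'red']
print(vectors[0])  # [0, 0, 1]
```

Label encoding imposes an arbitrary order (here blue < green < red), so it generally suits ordinal data or tree-based models better than linear models, where one-hot encoding avoids implying such an order.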
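For removing redundant features, a simple heuristic is to compute pairwise Pearson correlations and drop one feature from each highly correlated pair. The sketch below assumes a hypothetical threshold of 0.95 and a greedy rule that keeps the first feature of each pair; both are illustrative choices, not a standard procedure.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column has no defined correlation; treat as 0
    return cov / (sx * sy)


def drop_correlated(features, threshold=0.95):
    """Greedy filter: for each pair with |r| >= threshold, drop the later column."""
    dropped = set()
    for a, b in combinations(features, 2):
        if a in dropped or b in dropped:
            continue
        if abs(pearson(features[a], features[b])) >= threshold:
            dropped.add(b)
    return {name: col for name, col in features.items() if name not in dropped}


heights_cm = [150, 160, 170, 180]
features = {
    "height_cm": heights_cm,
    "height_in": [h / 2.54 for h in heights_cm],  # same quantity in inches: redundant
    "weight_kg": [55, 72, 61, 80],
}
print(list(drop_correlated(features)))  # ['height_cm', 'weight_kg']
```

Pairwise correlation only catches redundancy between two features at a time; multicollinearity involving three or more features usually calls for a measure such as the variance inflation factor.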
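Feature engineering is highly domain-specific, but a typical pattern is to derive new columns from existing ones. The sketch below works on a hypothetical order record: it extracts the day of the week and a weekend flag from a date and computes a ratio feature. The field names and the derived features are invented purely for illustration.

```python
from datetime import date

# Hypothetical order records.
orders = [
    {"order_date": date(2024, 3, 9), "total": 120.0, "items": 4},
    {"order_date": date(2024, 3, 11), "total": 45.0, "items": 1},
]


def engineer(order):
    """Return a copy of `order` with a few derived features added."""
    d = order["order_date"]
    return {
        **order,
        "day_of_week": d.weekday(),  # 0 = Monday ... 6 = Sunday
        "is_weekend": d.weekday() >= 5,
        "avg_item_price": order["total"] / order["items"],
    }


enriched = [engineer(o) for o in orders]
print(enriched[0]["is_weekend"], enriched[0]["avg_item_price"])  # True 30.0
```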
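The two transformations mentioned for skewed data follow simple formulas: log(1 + x) for the log transformation, and (x^λ - 1) / λ (or ln x when λ = 0) for Box-Cox, which is defined only for strictly positive x. In this plain-Python sketch, λ is passed in by hand; in practice it is usually estimated by maximum likelihood, which `scipy.stats.boxcox` does when no λ is supplied.

```python
import math


def log_transform(values):
    """log(1 + x): compresses a long right tail; defined for x > -1."""
    return [math.log1p(v) for v in values]


def box_cox(values, lam):
    """Box-Cox for strictly positive x: (x**lam - 1) / lam, or ln(x) when lam == 0."""
    if any(v <= 0 for v in values):
        raise ValueError("Box-Cox requires strictly positive values")
    if lam == 0:
        return [math.log(v) for v in values]
    return [(v ** lam - 1) / lam for v in values]


incomes = [20_000, 25_000, 30_000, 40_000, 250_000]  # right-skewed toy data
print(log_transform(incomes))
print(box_cox(incomes, 0.5))
print(box_cox([4.0], 0.5))  # [2.0]
```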
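Data integration often comes down to joining tables on a shared key. The sketch below implements a left join over lists of dicts: every row on the left is kept, and right-hand columns are filled with None when there is no match. The tables, the `customer_id` key, and the `left_join` helper are hypothetical; with pandas, `pd.merge(..., on="customer_id", how="left")` performs the same kind of join.

```python
# Hypothetical tables from two sources that share a "customer_id" key.
customers = [
    {"customer_id": 1, "name": "Ana"},
    {"customer_id": 2, "name": "Ben"},
]
orders = [
    {"order_id": 10, "customer_id": 1, "total": 99.0},
    {"order_id": 11, "customer_id": 3, "total": 15.0},  # no matching customer
]


def left_join(left, right, key):
    """Keep every row of `left`; attach matching `right` columns, or None if unmatched."""
    index = {}
    for r in right:
        index.setdefault(r[key], []).append(r)
    right_cols = {c for r in right for c in r if c != key}
    joined = []
    for row in left:
        matches = index.get(row[key], [])
        if matches:
            # On a column-name clash, the right-hand value wins.
            joined.extend({**row, **m} for m in matches)
        else:
            joined.append({**row, **{c: None for c in right_cols}})
    return joined


merged = left_join(orders, customers, "customer_id")
print(merged[0]["name"], merged[1]["name"])  # Ana None
```

Unmatched keys, like `customer_id` 3 here, are worth counting after every join, because they often reveal inconsistencies between the sources.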
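Data type conversion is often needed when data arrives as text, for example from a CSV file. This sketch converts hypothetical string fields into an integer, a float, a date, and a boolean. Unparseable numbers become None rather than raising an error, so they can go through the missing-data step. The field names, the accepted truthy strings, and the date format are assumptions.

```python
from datetime import datetime

# Hypothetical raw rows as they might come out of a CSV reader (all strings).
raw = [
    {"id": "1", "price": "19.99", "signup": "2023-05-01", "active": "yes"},
    {"id": "2", "price": "n/a", "signup": "2023-06-15", "active": "no"},
]


def to_float(s):
    """Parse a numeric string; return None instead of raising on bad input."""
    try:
        return float(s)
    except (TypeError, ValueError):
        return None


def convert(row):
    """Convert one raw row to typed values."""
    return {
        "id": int(row["id"]),
        "price": to_float(row["price"]),
        "signup": datetime.strptime(row["signup"], "%Y-%m-%d").date(),
        "active": row["active"].strip().lower() in {"yes", "true", "1"},
    }


typed = [convert(r) for r in raw]
print(typed[1]["price"])  # None
```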
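For data partitioning, a basic random split shuffles the rows with a fixed seed and then slices them into training, validation, and test sets. The 70/15/15 proportions and the seed value below are illustrative defaults, not recommendations from the text.

```python
import random


def train_val_test_split(items, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle a copy of `items` and cut it into train/validation/test lists."""
    if val_frac + test_frac >= 1:
        raise ValueError("val_frac + test_frac must be < 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded, so the split is reproducible
    n = len(shuffled)
    n_test = round(n * test_frac)
    n_val = round(n * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

A purely random split is not always appropriate. Imbalanced classification data usually calls for a stratified split, and time series should be split chronologically so that the model is never trained on data from the future.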

Data cleaning and preprocessing are often iterative, and different datasets may require different techniques depending on the nature of the data and the goals of the analysis. Properly cleaned and preprocessed data lays the foundation for accurate and meaningful analysis and modeling, leading to more reliable insights and predictions.